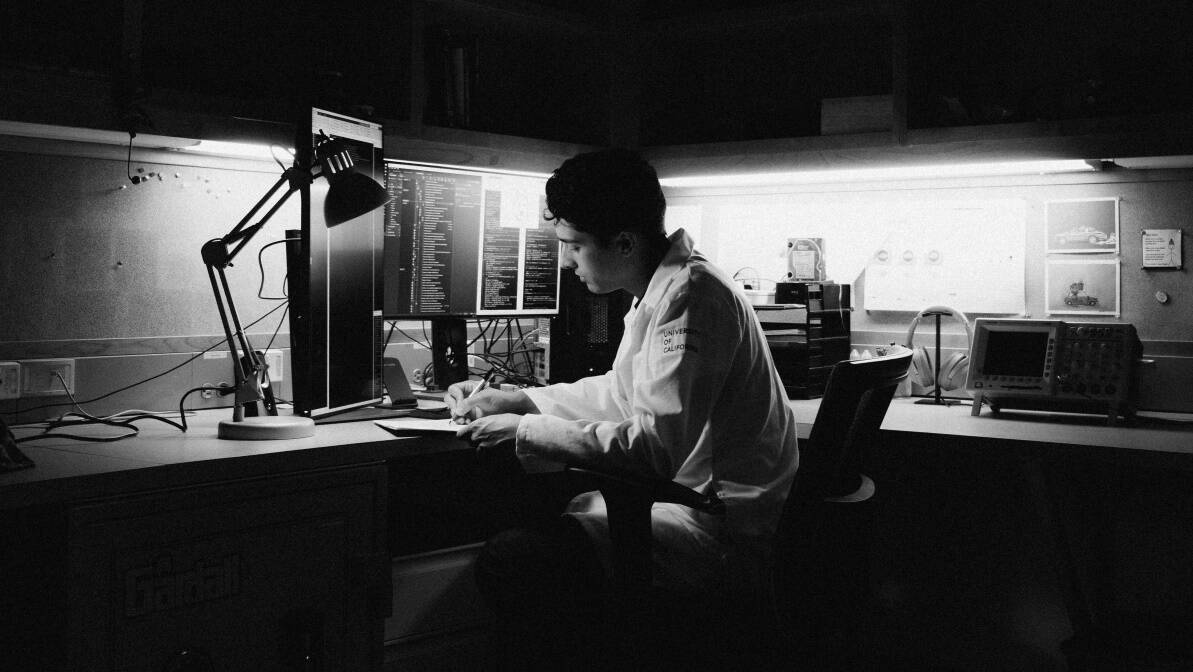

Sumner Norman, chief neuroscientist at AE Studio, talks to Tami Freeman about the company’s work in brain–computer interfaces

A brain–computer interface (BCI) is a system that enables information to flow directly between the brain and an external device such as a computer, smartphone or robotic limb. The BCI includes hardware that records brain signals – most commonly electrical signals generated by neurons in the brain – and software that analyses features in these signals and converts them into commands to perform a desired action. The major application of BCIs is to restore abilities such as movement or communication to people with neurological disorders or injuries.

AE Studio, a data science company based in Los Angeles, is developing machine-learning algorithms that will expand the ability of BCIs to interpret brain activity in real time. Sumner Norman, chief neuroscientist at AE Studio and research scientist at Caltech, tells Physics World about the company’s mission to create software that increases human agency.

Can you describe the basic ideas behind the BCI?

When neurons in your brain are active they create electromagnetic fields and changes in haemodynamics, which provide a few different sources of contrast. If you have a way to interact with those physiological mechanisms, you can extract information from the brain.

The brain contains up to 80 billion neurons, which have up to a thousand synapses each. It’s an incredibly complex piece of machinery. So interpreting the extracted signals is actually a really complex problem. We use machine-learning algorithms to decode the information. But once you have interpreted the brain activity then you can use it. This could be as simple as controlling a cursor on a computer screen, or you can scale up to interacting with robotic limbs, anything the mind can imagine.
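As a rough illustration of what "decoding" can mean for the cursor example, here is a minimal sketch of a population-vector decoder, a classic textbook approach and not necessarily what AE Studio uses. The cosine tuning model, the 16 simulated neurons and every function name below are assumptions made for the example.

```python
import math

# Hypothetical population: 16 simulated neurons whose preferred movement
# directions are evenly spaced around the circle (an assumption).
N_NEURONS = 16
PREFERRED = [2 * math.pi * i / N_NEURONS for i in range(N_NEURONS)]


def tuning_rate(theta, preferred, baseline=10.0, gain=8.0):
    """Cosine tuning: firing rate (Hz) of one neuron for movement angle theta."""
    return baseline + gain * math.cos(theta - preferred)


def population_vector(rates, preferred_dirs, baseline=10.0):
    """Weight each neuron's preferred direction by its rate above baseline,
    sum the resulting vectors and return the angle of the sum (radians)."""
    x = sum((r - baseline) * math.cos(p) for r, p in zip(rates, preferred_dirs))
    y = sum((r - baseline) * math.sin(p) for r, p in zip(rates, preferred_dirs))
    return math.atan2(y, x)


def decode_direction(intended_angle):
    """Simulate noise-free firing rates for an intended angle, then decode them."""
    rates = [tuning_rate(intended_angle, p) for p in PREFERRED]
    return population_vector(rates, PREFERRED)


def cursor_step(position, angle, speed=1.0):
    """Move a 2D cursor one step of length `speed` along the decoded angle."""
    return (position[0] + speed * math.cos(angle),
            position[1] + speed * math.sin(angle))
```

With real recordings the firing rates are noisy and the tuning has to be learned from calibration data, which is where machine-learning models come in.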

What is the main focus of current BCI research?

In recent years, efforts have started to translate out of academia and into industry. One line of commercial research is centred around the electrode-based technologies that sense electrical activity from the brain.

Often the most high-performance BCIs are intracortical, where the electrodes are implanted into the cortex itself. One company, Synchron, is investigating how to get electrodes into the brain by inserting a stent into the existing vasculature, which then snakes its way up into the brain and implants itself close to the motor cortex. Neuralink, Elon Musk’s company, is using very fine wire electrodes to create a fully implantable, fully wireless system with an extremely high channel count.

On the academic side, researchers are developing new sensing modalities, moving away from implanted electrodes. Optically pumped magnetometry, for example, senses the magnetic field generated by large populations of neurons firing together. Some of my research has involved the use of ultrasound to detect the motion of individual red blood cells in response to neural activity.

AE Studio recently announced a collaboration with Blackrock Neurotech, which aims to release the first commercial BCI platform next year. What is Blackrock developing?

Blackrock uses the Utah array, an electrode array that’s implanted in the brain, which first came out of the University of Utah. Over the last 20 years it has been developed within academia, with a long track record of safety and success. Blackrock’s goal is to package this into something that people with severe forms of paralysis – late-stage ALS, spinal cord injury and so on – can use to control computerized devices, such as a cursor on a screen.

Decoding an intended direction – moving a joystick to type letters on a screen – can be frustrating and slow. One interesting application, which came out of a group at Stanford, decodes imagined handwriting. Paralysed patients, who sometimes haven’t been able to move for decades, will attempt to write as they remember writing before they were paralysed. And the BCI device can decode that information almost as fast as you or I could write. This is an incredibly fast form of communication.

How is AE Studio involved in this project?

Academics come up with wonderful ideas and we want to bring those ideas into a reliable BCI that potentially millions of people can come to use and trust. We use good data science practices to improve device performance, but we also want to improve the user experience. This includes faster and less frequent calibration, so people spend more time handwriting, for example, and less time training the decoder.

We also need models that adapt quickly to the user’s brain changing or developing new skills. This is a big problem in BCI: models need to be constantly recalibrated. AE Studio is well positioned to stabilize those models over long periods of time.
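One simple way to picture adaptation without explicit recalibration is to track each channel's slowly drifting baseline and subtract it before decoding. The sketch below uses an exponential moving average for this; it is an illustrative stand-in, not AE Studio's method, and the class name and `alpha` parameter are hypothetical.

```python
class AdaptiveNormalizer:
    """Tracks a per-channel baseline with an exponential moving average (EMA)
    and returns each incoming sample with that baseline subtracted."""

    def __init__(self, n_channels, alpha=0.05):
        self.alpha = alpha                    # larger alpha adapts faster
        self.baseline = [0.0] * n_channels
        self.initialized = False

    def update(self, sample):
        if not self.initialized:
            # Use the first sample as the starting baseline estimate.
            self.baseline = list(sample)
            self.initialized = True
        else:
            # EMA update: blend the old baseline with the new sample.
            self.baseline = [
                (1 - self.alpha) * b + self.alpha * s
                for b, s in zip(self.baseline, sample)
            ]
        # Baseline-corrected signal that would be passed to the decoder.
        return [s - b for s, b in zip(sample, self.baseline)]
```

This only absorbs additive drift. Changes in how neurons are tuned, for example as the user learns a new skill, would need the decoder itself to be updated.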

Are these examples of “creating software to increase human agency”?

Yes, that’s exactly right. In the near term, what we mean by increasing human agency is restoring function to people who have lost agency over the control of their body. But as with every new technology, BCIs come with possible downsides, and we want to be aware of these even early on.

For example, these machine-learning algorithms benefit from training with huge amounts of data from many people. But how do you pool knowledge gained from thousands or millions of users without compromising their privacy? So we’re thinking up clever ways of protecting user data, while also being able to share these complex models and update them with community use cases.

We want to work with the community, including academics, commercial groups and users themselves, to create devices where user data is encrypted and kept behind firewalls and ideally never even leaves the person’s device. These are the sorts of principles that we’re putting together now, and we’re just getting started on what that means in software.
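The interview does not name a specific technique, but federated averaging is one known approach in which raw data stays on each device and only model weights are shared. The sketch below assumes a toy linear model trained by gradient descent; the function names and training setup are hypothetical.

```python
def local_update(weights, features, targets, lr=0.1):
    """One gradient-descent step of linear least squares, computed on a single
    device using only that device's private data."""
    n = len(features)
    grad = [0.0] * len(weights)
    for x, y in zip(features, targets):
        err = sum(w * xi for w, xi in zip(weights, x)) - y
        for j, xi in enumerate(x):
            grad[j] += 2 * err * xi / n
    return [w - lr * g for w, g in zip(weights, grad)]


def federated_average(client_weights, client_sizes):
    """Server side: average the weight vectors, weighted by sample count.
    The server sees weights only, never the underlying recordings."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(dim)
    ]


def train(clients, dim, rounds=200):
    """clients: list of (features, targets) pairs, one per device."""
    global_w = [0.0] * dim
    for _ in range(rounds):
        updates = [local_update(global_w, X, y) for X, y in clients]
        sizes = [len(X) for X, _ in clients]
        global_w = federated_average(updates, sizes)
    return global_w
```

Shared weights can still reveal something about the data they were trained on, so a real system would likely combine this with the encryption mentioned above.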

And how could developments in BCI hardware benefit people?

These days, electrodes come with some risks, as implanting them in the brain itself can damage brain tissue. Ideally, we’d be outside of the brain entirely and specifically outside of the dura mater, the brain’s protective membrane.

If you can make the BCI surgery so simple that it’s effectively plugging a hole in the skull but never touching the brain, it becomes a short outpatient procedure and looks a lot less like brain surgery. That, I think, is where future applications start to expand, because then the user base grows beyond just people who have severe forms of paralysis. Once you get to these kinds of scenarios, then even mild to moderate improvements in quality of life start to make a lot of sense.

What other BCI applications might be introduced clinically in the next few years?

In the short term, the big move forward is to higher channel counts. Remember those 80 billion neurons? A Utah array only records from maybe a couple of hundred neurons at a time. Being able to record from tens or hundreds of thousands of neurons opens up much bigger possibilities for the fidelity with which you can decode motor intention. Decoding intended speech, for example, is right on the cusp of something that we can do quite well today, but it’s not quite ready for commercialization.
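To put those numbers in proportion, here is a back-of-the-envelope ratio. It assumes "a couple of hundred" means roughly 200 neurons, takes 100,000 as the larger target, and uses the 80 billion figure quoted above:

$$
\frac{2 \times 10^{2}}{8 \times 10^{10}} = 2.5 \times 10^{-9},
\qquad
\frac{1 \times 10^{5}}{8 \times 10^{10}} = 1.25 \times 10^{-6}
$$

Under these assumptions, even a 500-fold increase in channel count would still sample only about one neuron in a million.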

In the medium term, there are new, less invasive technologies. Transcranial magnetic stimulation is starting to grow in clinical adoption and efficacy, for treating depression, for example. One company is doing electroencephalography (EEG)-based motor rehabilitation for people who’ve had a stroke – this is a non-invasive BCI.

In the long term, you could create nanomaterials that move through the body or bloodstream, or combine BCIs with genetic and molecular engineering. You could amplify the ability of these technologies so that instead of sensing a broad signal, they start to sense individual cells or cell types associated with certain diseases. And they could do that throughout the entire brain, rather than just in the tiny scope of an electrode. Now you start to open up the ability to deliver drugs to targeted regions with no side effects. The possibilities become endless. And that’s really the most exciting thing about neurotechnology – we’re just at the beginning.