Although Marvel Comics’ portrayal of telekinesis may frighten some, long-range control of objects or machines may eventually be realizable through brain–machine interface technology. Such technologies connect external devices to the brain’s electrical outputs, enabling communication between humans and computers. Recently, a team of researchers from South Korea, the UK and the US developed soft scalp electronics for real-time brain interfacing and motor imagery acquisition. Their study, published in Advanced Science, details the design and application of this device.

The leading technique for non-invasive acquisition of the brain’s electrical activity is electroencephalography (EEG), which measures brain activity via scalp-mounted electrodes. Unfortunately, these electrodes usually have to be held in place by a hair cap with extensive wiring, which is often bulky and uncomfortable. Moreover, EEG typically requires conductive gels or pastes to minimize the effects of motion artefacts and electromagnetic interference.

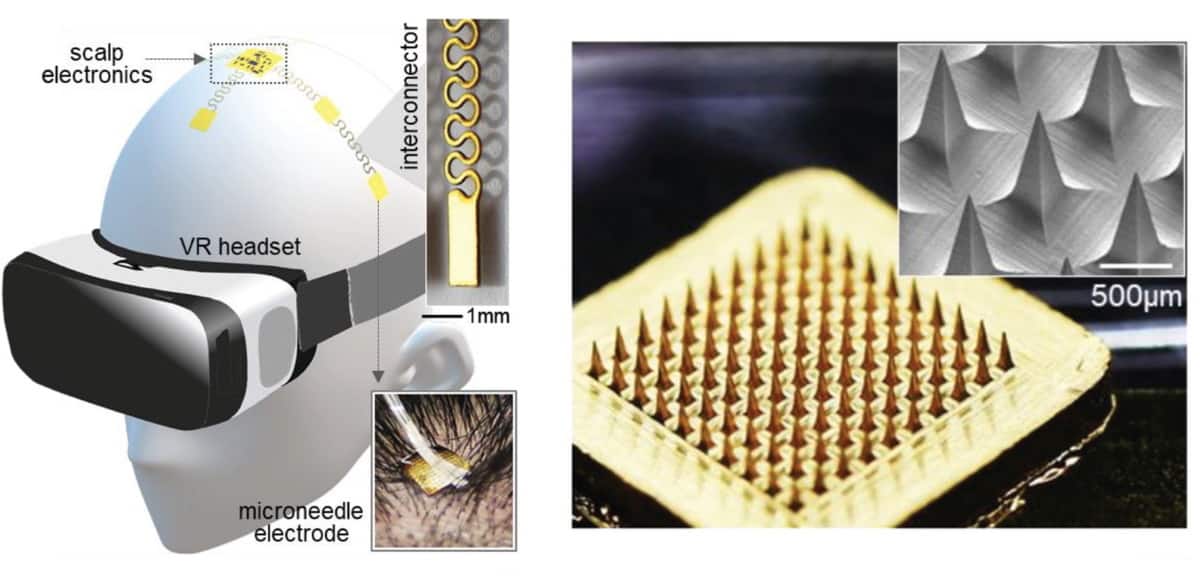

To address these shortfalls, the research team, led by Woon-Hong Yeo at Georgia Institute of Technology, has developed a portable EEG system that’s soft and comfortable to wear. The new device uses an array of flexible microneedles as an electrode to detect brain signals. These signals feed through a stretchable connector into a wireless circuit, which filters and processes them in real time.

The gold-plated microneedle electrode provides biocompatibility and excellent contact with the scalp. Mechanical testing demonstrated the array’s durability: its electrical resistance changed only slightly over 100 bending cycles. The microneedles themselves also proved mechanically robust, with their shape and electrical coating remaining intact after 100 insertions into porcine skin.

To test how well the device could classify brain signals, the team paired it with a virtual reality video game and a machine learning algorithm. In the video game, four volunteers responded to visual cues by performing motor imagery tasks every four seconds. The device recorded each volunteer’s brain activity, and a convolutional neural network interpreted these signals to determine which task the subject intended. The system correctly classified 93% of inputs at a high information transfer rate of 23 bit/min, enabling real-time wireless control of the game.
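To see how an accuracy figure translates into bits per minute, the sketch below uses the widely used Wolpaw information-transfer-rate formula, B = log2 N + P log2 P + (1 − P) log2((1 − P)/(N − 1)) bits per trial. This is an illustration only: the article does not say which ITR definition the authors used, and the number of motor imagery classes (N = 4 here) is an assumption. The accuracy (93%) and the four-second cue interval come from the text above.

```python
import math


def wolpaw_bits_per_trial(n_classes: int, accuracy: float) -> float:
    """Bits conveyed per selection under the Wolpaw ITR model.

    Assumes all classes are equally likely and that errors are spread
    evenly across the remaining n_classes - 1 classes.
    """
    if n_classes < 2:
        raise ValueError("need at least two classes")
    if not 0.0 < accuracy <= 1.0:
        raise ValueError("accuracy must be in (0, 1]")
    bits = math.log2(n_classes) + accuracy * math.log2(accuracy)
    if accuracy < 1.0:
        # The remaining probability mass is shared equally by the wrong classes.
        bits += (1 - accuracy) * math.log2((1 - accuracy) / (n_classes - 1))
    return bits


def itr_bits_per_minute(n_classes: int, accuracy: float, seconds_per_trial: float) -> float:
    """Scale bits per trial to bits per minute for a fixed trial duration."""
    return wolpaw_bits_per_trial(n_classes, accuracy) * 60.0 / seconds_per_trial


# Assumed: 4 classes (hypothetical). From the article: 93% accuracy, one cue every 4 s.
rate = itr_bits_per_minute(4, 0.93, 4.0)
print(f"{rate:.1f} bit/min")  # prints "22.8 bit/min"
```

Under these assumptions the result, roughly 22.8 bit/min, is consistent with the reported 23 bit/min; with a different class count or trial length, the same accuracy would give a different rate.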

The researchers conclude that this motor imagery technique offers significant potential as a general-purpose brain–machine interface. They note that further work is needed to optimize electrode placement, so as to maximize the number of functional imagery classes while maintaining high classification accuracy. The group believes that, in time, the technology may offer a solution for individuals suffering from paralysis, brain injuries or disorders such as locked-in syndrome.

Perhaps Stan Lee had a premonition of brain interface technology when he first depicted Wanda Maximoff’s telekinetic powers back in 1964…