Physicists should be wary of data from gravitational-wave observatories that appear to contradict Einstein’s general theory of relativity. That is the message from researchers in the UK, who have analysed how errors accumulate when the results from multiple black-hole mergers are combined. They say that current gravitational-wave catalogues are close to containing enough events for accumulated modelling errors to be mistaken for signals of alternative theories of gravity.

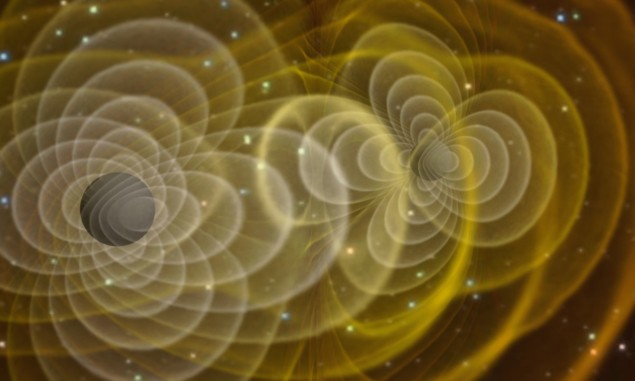

The discovery of gravitational waves by the LIGO collaboration in the US in 2015 was one of the most important vindications of Einstein’s general theory of relativity. That theory, formulated a century earlier, predicts that massive, accelerating objects will generate wave-like distortions in space-time that radiate away from them. The waves are minuscule, but LIGO and other laser interferometers are now sensitive enough to pick up the distinctive waveforms from certain pairs of massive celestial objects. In the case of the first detection and most others since, the objects in question were two merging black holes.

Ironically, however, physicists also hope that gravitational waves might reveal flaws in general relativity. They strongly suspect that the theory does not provide a complete description of gravitational interactions, given its incompatibility with quantum mechanics. To this end, researchers make detailed comparisons between the waveforms of gravitational radiation picked up by interferometers and those predicted by general relativity – with any inconsistencies between the two signalling a possible hole in the theory.

As Christopher Moore and colleagues at the University of Birmingham point out, all detections to date have been consistent with general relativity. But the scrutiny will intensify as LIGO and its European counterpart – the Virgo detector in Italy – become more sensitive, and other observatories start up elsewhere. Indeed, it might become possible to identify features in the observed waveforms that discriminate between general relativity and alternative theories, such as ones motivated by quantum gravity.

Combining data

Doing so with individual events is limited by the strength of each signal. But as the number of events grows (about 50 binary systems have been observed to date), researchers are looking to combine their data and thereby perform more stringent tests.

In the latest work, Moore and colleagues sought to establish how large systematic errors could become when such multi-event analyses are carried out. The results surprised them, they say: small modelling errors can accumulate faster than expected when events are combined in catalogues.

As the researchers explain in a paper published in the journal iScience, modelling the waveforms from specific celestial phenomena is a complex business. As a result, they say, several simplifications have to be imposed to make the calculations manageable. These include dropping higher-order mathematical terms and ignoring certain physical effects – such as those deriving from black holes’ spin and orbital eccentricity. Even then, they say, finite computing power limits the calculations’ accuracy.

Additional parameters

Using a simple method of data analysis that assumes the signal-to-noise ratio is very large, Moore and colleagues found that the extent of error accumulation depends on how individual gravitational-wave events are combined, which in turn depends on how additional parameters are added to the equations of general relativity. On the one hand, there are parameters that would be common to all events, such as the mass of the hypothetical force particle known as the graviton. On the other hand, there are parameters whose values can change from one event to the next, such as “hairs” on black holes.

In addition, say the researchers, error accumulation depends on how modelling errors are distributed across the events in a catalogue and how they align with different deviations from relativity: whether they consistently push the inferred deviation in the same direction or instead tend to average out.
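The difference between modelling biases that line up and biases that cancel can be illustrated with a toy calculation. The Python sketch that follows is not the Birmingham group's method. It assumes a single deviation parameter shared by every event (as a graviton mass would be), identical per-event statistical errors, and a fixed modelling bias in each event whose sign is either always the same or random. The helper names `combined_estimate` and `false_significance` are hypothetical. Combining events by inverse-variance weighting shrinks the statistical error as 1/√N, so a coherent bias grows in apparent significance as √N, while random-sign biases largely cancel.

```python
import math
import random


def combined_estimate(biases, sigmas):
    """Inverse-variance weighted mean of per-event estimates of a shared
    parameter, together with the combined standard error.

    Toy assumption: each event's expected estimate equals the GR value (0)
    plus a systematic bias from waveform-modelling error, so only the bias
    propagates into the combined point estimate.
    """
    weights = [1.0 / s**2 for s in sigmas]
    total = sum(weights)
    mean = sum(w * b for w, b in zip(weights, biases)) / total
    return mean, math.sqrt(1.0 / total)


def false_significance(n_events, bias_over_sigma, coherent, seed=0):
    """Apparent deviation from GR, in units of the combined standard error.

    bias_over_sigma: each event's bias as a fraction of its own statistical
    error (a hypothetical, illustrative knob).
    coherent: if True every bias has the same sign; otherwise signs are random.
    """
    rng = random.Random(seed)
    sigma = 1.0  # per-event statistical error, arbitrary units
    biases = []
    for _ in range(n_events):
        sign = 1.0 if coherent else rng.choice([-1.0, 1.0])
        biases.append(sign * bias_over_sigma * sigma)
    mean, err = combined_estimate(biases, [sigma] * n_events)
    return abs(mean) / err


if __name__ == "__main__":
    for n in (10, 30, 100):
        aligned = false_significance(n, 0.5, coherent=True)
        # Average the random-sign case over many hypothetical catalogues.
        scattered = sum(
            false_significance(n, 0.5, coherent=False, seed=s) for s in range(200)
        ) / 200
        print(f"N={n:3d}  aligned: {aligned:.2f} sigma  random-sign: {scattered:.2f} sigma")
```

Only the shared-parameter case is modelled here; parameters that vary from event to event, such as black-hole hairs, would call for a hierarchical population analysis that this sketch does not attempt.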

“Dangerously close”

Moore and colleagues conclude that even if a waveform model is good enough to analyse individual events, it may still create erroneous evidence for physics beyond general relativity “with arbitrarily high confidence” when applied to a large catalogue. In particular, they calculate that such a false signal could emerge from as few as 10–30 events with a signal-to-noise ratio of at least 20. That, they write, “is dangerously close to the size of current catalogues”.
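A back-of-the-envelope version of this scaling shows why loud events matter. The assumptions are ours, not the paper's: every event in a catalogue of $N$ has the same statistical uncertainty $\sigma$ on a shared deviation parameter and the same modelling bias $\Delta\theta_{\mathrm{sys}}$, and we write $f = \Delta\theta_{\mathrm{sys}}/\sigma$. In the high signal-to-noise limit, $\sigma$ scales as $1/\rho$ for signal-to-noise ratio $\rho$, while the bias does not depend on $\rho$, so $f$ grows for louder events. Combining the events gives

```latex
\sigma_{\mathrm{cat}} = \frac{\sigma}{\sqrt{N}}, \qquad
Z = \frac{|\Delta\theta_{\mathrm{sys}}|}{\sigma_{\mathrm{cat}}} = f\sqrt{N}, \qquad
N_{\mathrm{false}} \approx \left(\frac{Z}{f}\right)^{2}
```

where $Z$ is the apparent significance of the spurious deviation. Purely as an illustration, with $f = 1$ a false 3σ deviation would appear after about 9 events, and with $f = 0.5$ after about 36; these numbers are not taken from the study.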

The researchers acknowledge that more work needs to be done to gauge the reliability of such multiple-event analyses. In particular, they say it will be necessary to test the statistical procedures involved on simulated, rather than real, data.

“Excellent starting point”

Other scientists welcome the new research. Nicolás Yunes of the University of Illinois Urbana-Champaign in the US says the problem of mistaking errors for new physics has been known for some time but reckons the study represents “an excellent starting point to continue investigating this potential problem and determine how to overcome it”.

Katerina Chatziioannou of the California Institute of Technology in the US argues that although waveform models are “good enough” for existing gravitational-wave data, it remains to be seen whether they are up to the job in the future. Nevertheless, she says, researchers are “actively working to improve the models”.

Indeed, Emanuele Berti of the Johns Hopkins University, also in the US, is optimistic that a “self-correcting process” will take place. “As we learn waveforms and astrophysical properties of the events,” he says, “we should be able to correct for the effects pointed out in the paper.”