An artificial neural network that can identify regions of COVID-19 lung infection in CT scans, despite being trained only on images of healthy patients, has been developed by researchers in the UK and China. The tool can also be used to generate pseudo data to retrain and enhance other segmentation models.

One of the most significant health crises of the last century, the COVID-19 pandemic is estimated to have infected more than 250 million individuals worldwide since it first emerged back in December 2019. As we have all learnt over the last two years, the successful suppression of coronavirus transmission is dependent on effective testing and quarantine of infected individuals.

CT scanning is an important diagnostic tool, and one that could serve both as an alternative to reverse transcription polymerase chain reaction (RT-PCR) tests where these are in short supply and as a way to screen for PCR false negatives.

The problem with such uses of CT scans, however, is that they are dependent on having trained radiologists to interpret them — and increasing demand in outbreak hotspots would add more strain to local medical services. One solution lies in the application of deep learning-based artificial intelligence to analyse the images, which could help clinicians screen for COVID-19 faster and also lower the expertise level needed to do so.

While various studies have shown the potential for deep learning to aid with classification of the disease on CT images, few have examined its capacity to automate the localization of disease regions. One challenge in using deep learning for this task, however, lies in the scarcity of annotated datasets on which to train neural networks.

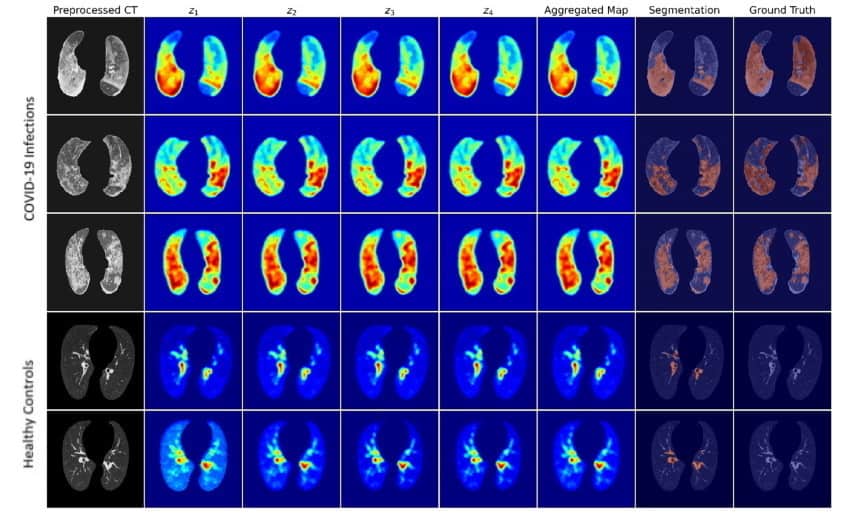

In their study, computer scientist Yu-Dong Zhang of the University of Leicester and colleagues propose a new weakly supervised deep-learning framework dubbed the “Weak Variational Autoencoder for Localisation and Enhancement”, or “WVALE” for short. The framework includes a neural network model named “WVAE”, which uses a gradient-based approach for anomaly localization. WVAE works by transforming and then recovering the original data in order to learn about their latent features, which then allows it to identify anomalous regions.
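The general idea of learning from healthy data only and then localizing anomalies through gradients can be illustrated with a toy example. The sketch below is not the WVAE model: it assumes a simple linear (PCA-style) autoencoder in place of a variational one, synthetic one-dimensional "scans" in place of CT images, and hypothetical function names throughout. It fits the autoencoder on healthy samples, then takes the gradient of the squared reconstruction error with respect to the input as a heat map.

```python
import numpy as np


def fit_linear_autoencoder(healthy, n_latent):
    """Fit a PCA-style linear autoencoder using healthy samples only."""
    mean = healthy.mean(axis=0)
    # Rows of vt are orthonormal principal directions of the centred data.
    _, _, vt = np.linalg.svd(healthy - mean, full_matrices=False)
    return mean, vt[:n_latent]


def reconstruct(x, mean, components):
    """Encode x into the latent space, then decode it back."""
    latent = (x - mean) @ components.T
    return latent @ components + mean


def anomaly_map(x, mean, components):
    """Magnitude of the gradient of the squared reconstruction error w.r.t. x.

    For this linear model the gradient has the closed form 2 * (x - x_hat).
    A deep model would obtain it by automatic differentiation instead.
    """
    residual = x - reconstruct(x, mean, components)
    return np.abs(2.0 * residual)


def make_demo(seed=0, n_pixels=64, n_healthy=200):
    """Build synthetic 1D 'scans': smooth healthy signals and one with a lesion."""
    rng = np.random.default_rng(seed)
    theta = 2.0 * np.pi * np.arange(n_pixels) / n_pixels
    basis = np.stack([np.sin(theta), np.cos(theta)])
    healthy = rng.normal(size=(n_healthy, 2)) @ basis
    healthy += 0.01 * rng.normal(size=healthy.shape)
    lesion = slice(20, 26)  # hypothetical lesion location, for illustration only
    scan = rng.normal(size=2) @ basis + 0.01 * rng.normal(size=n_pixels)
    scan[lesion] += 1.0
    return healthy, scan, lesion


healthy, scan, lesion = make_demo()
mean, components = fit_linear_autoencoder(healthy, n_latent=2)
heat = anomaly_map(scan, mean, components)
# Flag pixels whose gradient magnitude exceeds half the maximum (arbitrary cut-off).
flagged = np.flatnonzero(heat > 0.5 * heat.max())
print("flagged pixels:", flagged)
```

Because the model has only ever seen healthy patterns, it reconstructs those well and the lesion poorly, so the largest gradients should cluster on the synthetic lesion. The half-maximum cut-off is an arbitrary choice made for this illustration.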

The team’s approach is “weakly supervised” because they used only healthy control images to train their WVAE model — rather than a mixture of CT scans from healthy and coronavirus-infected patients. The study’s main dataset comprised CT scans from 66 patients diagnosed with COVID-19 at the Fourth People’s Hospital in Huai’an, China and 66 healthy medical examiners as controls.

The researchers found that the WVAE model is capable of producing high-quality attention maps from the CT scans, with fine borders around infected lung regions. Its segmentation results are comparable to those produced by many conventional, supervised segmentation models, and it outperforms a range of existing weakly supervised anomaly localization methods. Furthermore, they were able to go on to use pseudo data generated by this model to retrain and enhance other segmentation models.
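One plausible way to turn an attention map into pseudo segmentation data is to normalise and threshold it, then compare the resulting mask against a reference annotation. The sketch below assumes exactly that. The 0.5 threshold, the use of the Dice coefficient as the overlap score, and the function names are illustrative assumptions, not details reported from the study.

```python
import numpy as np


def pseudo_mask(attention, threshold=0.5):
    """Min-max normalise an attention map and binarise it at `threshold`."""
    attention = np.asarray(attention, dtype=float)
    span = attention.max() - attention.min()
    if span == 0:
        # A constant map carries no localization signal.
        return np.zeros(attention.shape, dtype=bool)
    return (attention - attention.min()) / span >= threshold


def dice(mask_a, mask_b):
    """Dice overlap between two binary masks (1.0 means identical)."""
    mask_a = np.asarray(mask_a, dtype=bool)
    mask_b = np.asarray(mask_b, dtype=bool)
    total = mask_a.sum() + mask_b.sum()
    if total == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return float(2.0 * np.logical_and(mask_a, mask_b).sum() / total)


# Toy 2x2 attention map and a hypothetical reference annotation.
attention = np.array([[0.1, 0.9], [0.8, 0.2]])
reference = np.array([[False, True], [False, False]])

mask = pseudo_mask(attention)
score = dice(mask, reference)
print(mask)
print(score)
```

Masks produced this way could then serve as training labels for a separate segmentation network, which is the role the article describes for the pseudo data.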


“Our study provides a proof-of-concept for weakly supervised segmentation and an alternative approach to alleviate the lack of annotation, while its independence from classification and segmentation frameworks makes it easily integratable with existing systems,” says Zhang.

With their initial study complete, the researchers are now looking to explore bringing different methods to bear on their framework.

“While the gradient-based method provides good anomaly localization performance in our case, it’s a post-hoc method — which attempts to interpret models after they’re trained — and WVAE is still a black box,” Zhang explains. “We plan to look at exploring methods that can perhaps make WVAE inherently interpretable and exploit that interpretability for tasks like anomaly localization and generating pseudo segmentation data.”

The study is described in Computer Methods and Programs in Biomedicine.

AI in Medical Physics Week is supported by Sun Nuclear, a manufacturer of patient safety solutions for radiation therapy and diagnostic imaging centres. Visit www.sunnuclear.com to find out more.
